Trolley Dilemma

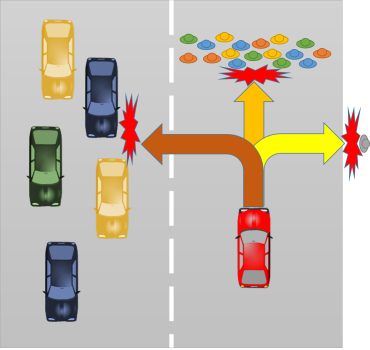

An autonomous car is travelling at speed towards a group of people, so it activates its braking system to stop before reaching them. However, the brakes fail and the car continues at the same speed towards the group.

The emergency response system kicks in and evaluates the two possible evasive manoeuvres:

- Swerve left, into the lane carrying oncoming traffic, hitting the cars travelling in it and injuring their passengers.

- Swerve right, onto the path where a lone pedestrian is standing, who would be the only person harmed.

Which option should the Artificial Intelligence controlling the car choose?

Dilemmas like this one have been on our minds for a long time. Isaac Asimov explored numerous paradoxes of this kind in several of his stories.

But can such situations actually arise?

For a definitive answer, we will have to wait until technology reaches the point where the premises being put forward become reality. Meanwhile, we can analyse and debate them, bearing in mind that we do so within the limits of our own biases.

For example, the problem as posed can be summed up as a «lesser evil» problem, and as stated it is meant to invite a value judgment about what an Artificial Intelligence might decide at that moment.

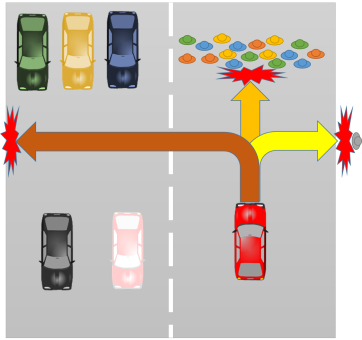

However, we are leaving out some variables that were not clear when the dilemma was first formulated. For example, smart devices can not only monitor what is happening around them but can also communicate with each other, allowing them to share information and coordinate their actions. In the case we are examining, the faulty car can report its situation to nearby devices, which in turn will evaluate the event and coordinate to choose the best option as a whole; here, that might be for the other cars to brake or accelerate slightly to leave room for it to pass. And that is without considering that, once autonomous cars are the norm, technologies such as predictive maintenance will be widely available, making a failure like the one described very unlikely.

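The coordination described above can be illustrated with a minimal Python sketch. It assumes a hypothetical alert message (`FailureAlert`) that the faulty car broadcasts with the lane it needs to move into, and a hypothetical `plan_responses` function each nearby car could run to decide whether to brake, accelerate or hold. For simplicity it assumes all vehicles travel in the same direction and uses an arbitrary 20 m gap; it is a toy illustration of the idea, not a real vehicle-to-vehicle protocol.

```python
from dataclasses import dataclass


@dataclass
class Vehicle:
    vehicle_id: str
    lane: int
    position_m: float  # distance along the road, in metres


@dataclass
class FailureAlert:
    # Hypothetical message broadcast by the car whose brakes have failed.
    sender_id: str
    target_lane: int   # lane the faulty car needs to move into
    position_m: float  # its current position along the road


def plan_responses(alert: FailureAlert, neighbours: list[Vehicle],
                   gap_m: float = 20.0) -> dict[str, str]:
    """Hypothetical rule: open a gap of gap_m metres around the faulty car."""
    actions: dict[str, str] = {}
    for v in neighbours:
        offset = v.position_m - alert.position_m
        if v.lane != alert.target_lane or abs(offset) >= gap_m:
            actions[v.vehicle_id] = "hold"        # not in the way
        elif offset >= 0:
            actions[v.vehicle_id] = "accelerate"  # ahead: pull away
        else:
            actions[v.vehicle_id] = "brake"       # behind: drop back
    return actions


alert = FailureAlert("car-0", target_lane=2, position_m=100.0)
neighbours = [
    Vehicle("car-1", 2, 110.0),
    Vehicle("car-2", 2, 95.0),
    Vehicle("car-3", 2, 150.0),
    Vehicle("car-4", 1, 105.0),
]
print(plan_responses(alert, neighbours))
# {'car-1': 'accelerate', 'car-2': 'brake', 'car-3': 'hold', 'car-4': 'hold'}
```

Each car decides locally from the same shared alert, so no central controller is needed; a real system would also have to account for speeds, oncoming traffic and messages that arrive late or not at all.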

One can also complicate the initial scenario, for example by putting passengers in the car or limiting the room to manoeuvre, and we can always find coordinated or technological ways out. Either way, does it make any sense to keep pursuing this line of argument?

Who is to blame?

Another issue I have seen quite often in articles that «deal with technology» is who should be held liable for an accident. The truth is that such articles lump together technologies as different as self-driving cars and computer-assisted driving.

In the latter, there is clearly a high risk: human error.

It should be noted that when some automation is present, most people act confidently, as if there were nothing to worry about, with a false and thoroughly undesirable sense of security, as I discussed more than a year ago in «A prueba de conductores» (Driver-proof, article in Spanish only).

In their eagerness to prove that technology is a danger we must guard against, these analysts do not hesitate to mix completely separate situations and technological scopes: autonomous cars are not the same as computer-assisted cars. The former are undoubtedly far safer because they eliminate the risk of human error, so the question of blame hardly arises; in the latter case, we already know who is to blame.

And… the Hackers?

Where we do face a latent risk, one that will grow as devices become part of a global interconnection, is in so-called cyberattacks.

This is not the illusory dilemma I discussed in the first part of this article, nor the human error we talked about in the second part. What we really need to worry about is the possibility of this type of vehicle being hijacked or attacked remotely.

In this case, a human (or group of humans) using some technological means could gain access to the vehicle and do the same things they do to computers connected to traditional networks: disable it, hijack it or take control of it. In each of these cases the consequences are far greater than at present because, since these devices can move through physical space, they can also cause physical damage.

Going back to the case with which we opened this article: if an attacker takes control of the car and deliberately drives it into the group of people, faster than it would normally travel, is the technology dangerous, or is the human being?

Despite all the dangers, imaginary or real, that we can foresee in this new technology, the truth is that it will prevail one way or another. That is what previous technological shifts should have taught us.

So we have to evolve: not only are the cases I have described drawing closer, but vehicle autonomy is pushing us towards a complete change in how we do things and relate to our various activities, as Benedict Evans explains (http://ben-evans.com/benedictevans/2017/3/20/cars-and-second-order-consequences).

Change is under way, and nobody will stop it, except perhaps a human driving at full speed.

So:

- Save the discussion of paradoxes as posed for parties with your friends.

- Don't blame the technology; chances are the fault is yours.

- Be aware that behind any connected device there may be an attacker waiting for a slip, so use it carefully.

The real paradox is that we create technology for ourselves, despite ourselves.
